They say one of the skills conversational AIs handle best is languages. After tinkering with ChatGPT for a while, I couldn’t agree more. So I wondered if there were tools that could help me with text translations using files with structured content like PO files.

Files with the .PO extension allow us to manage WordPress translations, its themes, extensions, as well as other applications and tools. When looking for PO file translation tools that used ChatGPT, I found Gpt-po by ryanhex53. It served to see the potential and prompted me to develop my own tool to thoroughly test the different options of the OpenAI API.

After conducting tests, the result was quite a surprise.

I approached this project as an experiment to assist in translation during the WordPress collaboration sessions of Wetopi, our WordPress Managed Hosting, within the “Five for the Future” framework that we carry out among friends on Fridays. The result, gptpo is available for you to use, discard, dismantle, refactor… And if you feel like collaborating to improve it, your help will always be welcome.

Preliminary considerations before using ChatGPT

We will use OpenAI’s API to translate with ChatGPT. This API works with its own USD wallet, and its use is charged through tokens. In its free version, the use of tokens is limited.

This article is quite dense and it is convenient to clarify certain aspects or concepts beforehand. The list below will allow us to move more fluidly through the article and will serve as a reference in case of doubt:

- Token: the smallest unit into which OpenAI’s language models (GPTs) divide a word to then be able to charge for it. It is not uniform across all ChatGPT models, nor does the same word always represent the same number of tokens in the same model.

- Cost: Translating with the OpenAI API has a cost per token. This can influence the ChatGPT model we want to use.

- ChatGPT uses a language model created by OpenAI. The API we will use is OpenAI’s own.

- gptpo is a command-line executable application designed to manage text translations of files in PO format, using GPT (Generative Pre-trained Transformer) artificial intelligence language models.

If you like this article, you’ll fall in love with our support

Join a hosting with principles

Includes free development servers- No credit card required

Translating with “Chat Completions API”

Chat Completions API is one of the ways offered by OpenAI’s API to access OpenAI’s ChatGPT. To conduct experiments, I found on GitHub the application “gpt-po“, a command-line application developed in JavaScript. “gpt-po” connects to OpenAI’s ChatGPT and makes calls using the “Chat Completion” process. It’s similar to opening a chat, providing instructions for it to take on the role of translator (“prompting”), and sending one by one the messages from the PO file for translation. The process is described step by step below.

Everything pointed towards success, towards a revolution…, but the result were inaccurate, inconsistent translations with serious errors:

- Some translations were interpreted directly, and some in question format were directly answered, skipping the instructions.

- It did not respect punctuation at the end of sentences, much less the white spaces.

- Impossible to find consistent results over time.

- Moreover, the translations it made from English to Catalan, in the WordPress community, are subject to a number of rules. We even have a Glossary that imposes certain rigidity and that ChatGPT at the time was not able to interpret.

- I also could not exceed the length of the “prompt”, since the “Chat completion” mode requires you to send the “prompt” for each question (in our case each message to translate). The longer the prompt, the slower the process became and the more expensive the translation costs.

After dealing with various prompts and different models (gpt-3.5-turbo, gpt-4-1106-preview) I abandoned my experiments.

GPT Assistants – A Major Advance!

In November 2023, during the OpenAI Devday event, Assistants were introduced, a new way of contextualizing work with ChatGPT. Access to these Assistants is made through an application programming interface (API).

A major advance that could help solve the numerous problems I had encountered so far.

In short, with Assistants, you can adjust a prompt and then recycle it throughout the chat. And very useful, you can attach files to enrich the prompt and the subsequent process.

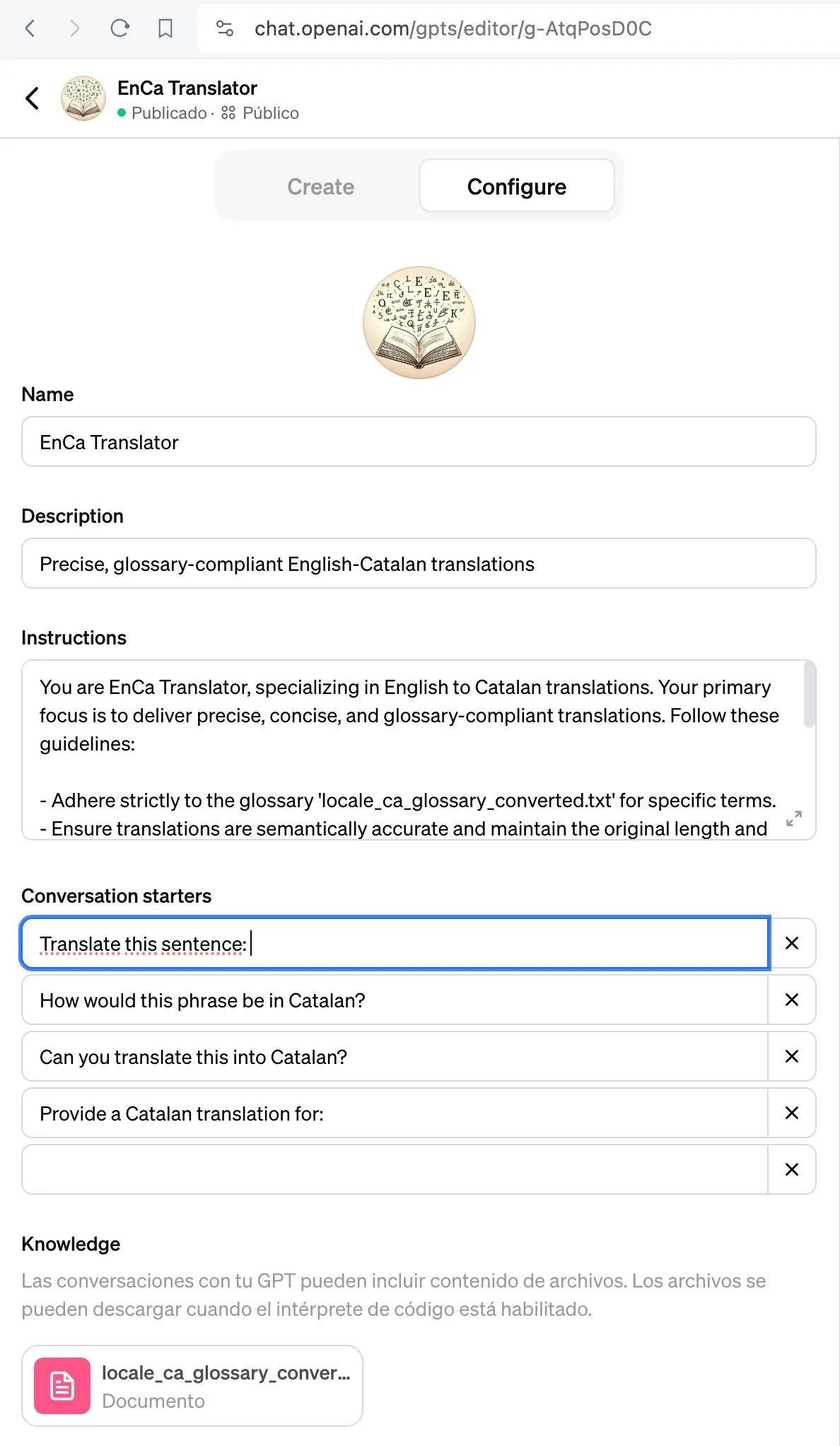

The first thing I did was to build an Assistant in the OpenAI panel itself.

I gave it a good list of instructions and attached a Glossary in text format for it to use to supervise its own translations.

And the results, much better.

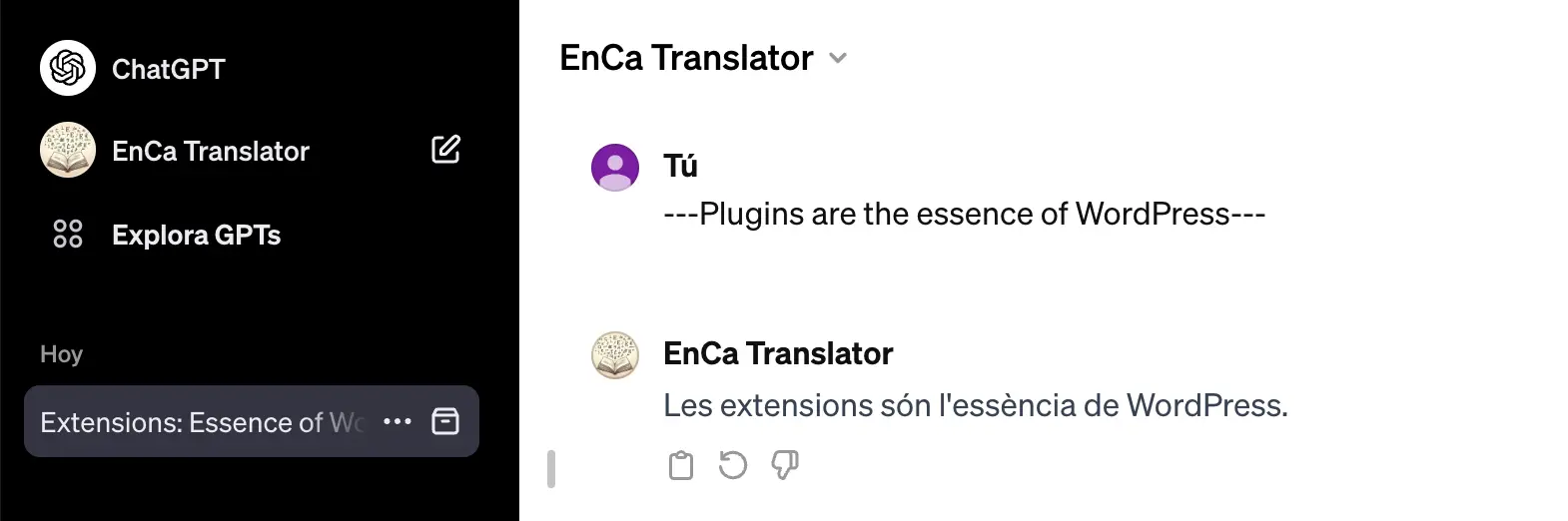

The resulting “Assistant” is published and you can try it at this link https://chat.openai.com/g/g-AtqPosD0C-enca-translator

Using OpenAI Assistants to translate PO files

After the results obtained with the Assistant, I regained hope, so I set out to incorporate this new modality into the gpt-po translator.

I made a copy on GitHub of the translator, well a fork called “gptpo“, and soon after, I had incorporated “Assistants” to test it against a PO file and a good volume of messages.

Evaluating the translation of PO files with Assistant

After evaluating the translation result, the assessment of using Assistant was: “not bad”, it could be useful.

Indeed: it was not a bad result, it was an advance, but I encountered:

- Inaccuracies, many inaccuracies and inconsistencies. Again, spaces and punctuation marks at the end of the string were not respected.

- It did not always follow the glossary rules.

- Slowness. To translate 300 strings (with a volume of about 2900 tokens) it took almost 1 hour. Perhaps it was the use of the

gpt-4-1106-previewmodel, or the added load of supervising translations to follow the glossary rules I attached as a file. - Costs skyrocketed. The tokens with the

gpt-4model are 20 times more expensive. However, the fact of not sending the prompt every time and being able to preserve the context (thread) I thought could help. The result did not seem to agree with my theory, the context window became very large. To generate 2909 tokens of translation it needed 169546 tokens of context:

Again the result seemed far from what I expected. Perhaps I missed some detail of implementation. Something seemed not right, and I set out to thoroughly reread the API manuals.

Better translations with Fine-Tuning of ChatGPT

I don’t know how I overlooked it, the fine-tuning seemed instantly the interesting option to try to narrow down the inaccuracies and especially to give specific instructions to the model.

Fine-tuning allows adjusting and improving the model’s performance specifically for a particular task, which helps reduce inaccuracies and provide precise instructions.

Specifically, ChatGPT’s fine-tuning is a process in which the ChatGPT artificial intelligence model is adjusted and customized to better fit a specific set of data or needs. It’s like teaching ChatGPT to be better in a specific area through practice with relevant examples.

In the Documentation, in the fine-tuning section it says:

“Fine-tuning” or “tuning” of OpenAI’s models can make them better for specific applications, but requires careful investment of time and effort.

With excitement, I didn’t consider the investment of time and effort as something important, so I decided to tune a model without thinking much about it.

You can find detailed information in the OpenAI documentation, and also later, in this same post, a step-by-step process.

Improving “gptpo” with ChatGPT’s “fine-tuning”

To facilitate the process, I added the commands for “fine-tuning” to the “gptpo” application. This way, with “gptpo”, we can fine-tune a model, to then use it in the translation of PO files.

gptpo is a command-line executable application that uses the Node.js engine, and is designed to manage PO file translations using OpenAI’s language models through its API.

Install gptpo to manage translations

If you don’t have Node.js on your computer. On the official page, you will find the installers: https://nodejs.org/en/download

Once we have Node.js, we can install the gptpo application with the command:

sudo npm install -g @sitamet/gptpoFor more details, you can consult the “Readme” of the GitHub repository: https://github.com/sitamet/gptpo

From now on, you have the gpto command at your disposal. Verify that everything has gone well by testing it with a simple gptpo --help

~ » gptpo --help

Usage: @sitamet/gptpo [options] [command]

Translation command line tool for gettext (po) files that supports pre-translation and chat-gpt translations with assistant and fine tuning of models.

Options:

-V, --version output the version number

-h, --help display help for command

Commands:

translate [options] translate po file with completions (default command)

translate-assistant [options] translate po file with assistant

fine-tuning [options] launch a new fine-tune task to customize your OpenAI model

fine-tuning-jobs [options] list fine-tune jobs

preprocess [options] update po from previous translated po files (origin po will incorporate translations from previous po files)

help [command] display help for commandYou will need an OpenAI API Key

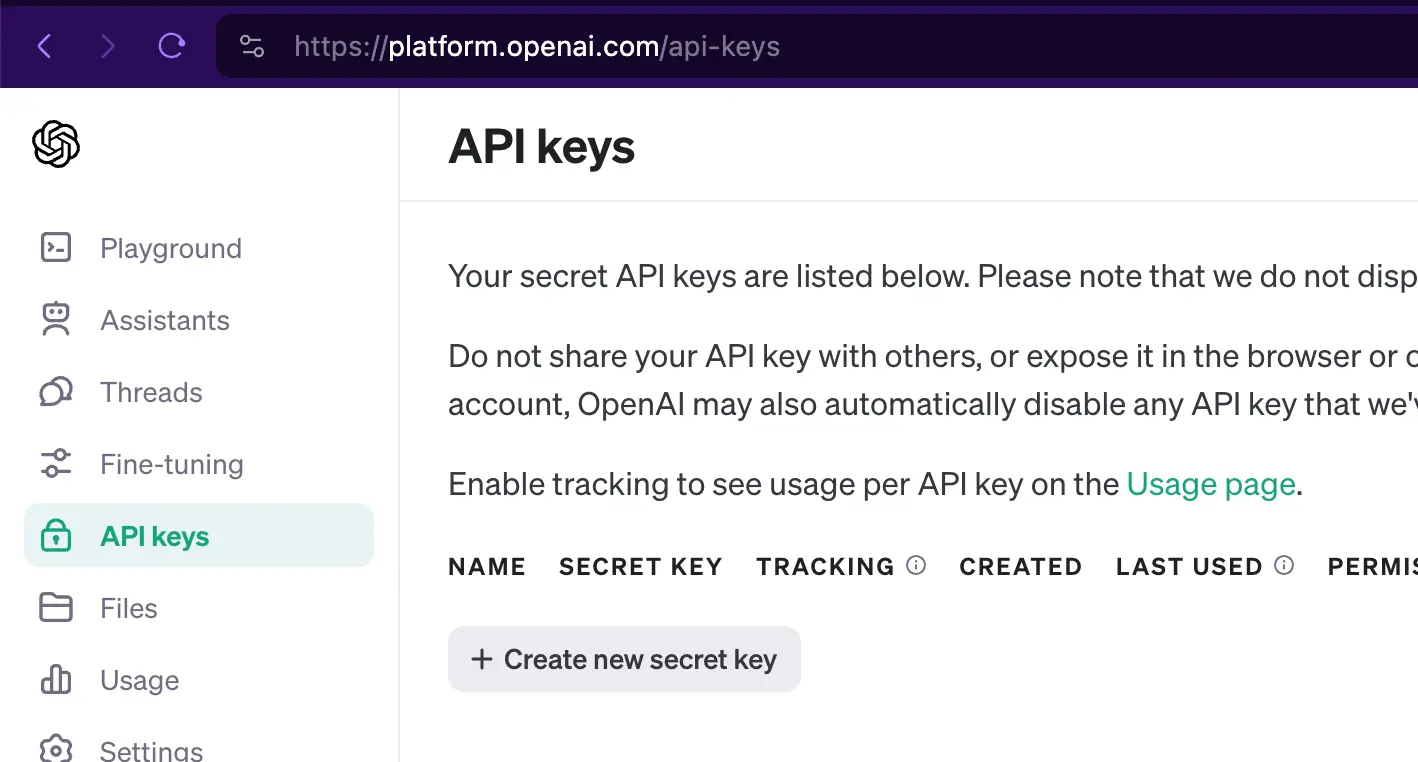

To use gptpo and make translations using OpenAI’s API, it is necessary to authorize the calls with a unique key. To get the API Key, go to https://platform.openai.com/api-keys

Log in and then on the API keys page use the “+ Create new secret key” button:

Each time we use gptpo to translate, we will have to provide our API key. To avoid having to “drag” the API key in all the command calls, we will save it in an environment variable:

export OPENAI_API_KEY=sk-F***********************************vGLImprove in Security, Performance & Support

Jump to a

Managed WordPress Hosting

Translate a PO file with OpenAI’s models

Although we will end up translating with a “tuned” model, we will start using one of OpenAI’s standard models. The idea is to collect all the errors that the “model” makes to then adjust it through “fine-tuning”.

OpenAI sets different limits and prices for each of its models.

More information about available models at https://platform.openai.com/docs/models/overview

In my case, I opted to use gpt-3.5-turbo-1106, for two reasons:

- The goal is to be able to translate large volumes of strings without having to pay a lot for it.

- By using it with fine-tuning and with not a very wide context size, 3.5-turbo-1106 could match the results of gpt-4 and even be faster.

Although this scenario may change as OpenAI delivers new models.

Save the model in environment variable

Just like with the API Key, to not drag the model in the command calls, we save it in an environment variable:

export OPENAI_API_MODEL=gpt-3.5-turbo-1106Define a concise “system prompt”

It’s important to note that, unlike the “system prompt” we would build using a standard model, that is, a refined, extensive detailed prompt… as we are going to work with “fine-tuning”, we are going to use a concise “system prompt”. And we will take care of polishing and shaping the responses using the tuning or “fine-tuning”.

We will save the text of our “system prompt” in systemprompt.txt in the working directory from where we are going to launch the gptpo command:

cd demo

echo 'You are a machine translating English to Catalan text.' > systemprompt.txtTranslate a PO file with gptpo

If we want to translate the text strings from `test.po`, as we already have the environment variables defined, the command to launch is relatively simple:

gptpo translate --po ./test.po --verbose

░░░░░░░░░░░░░░░░░░░░ 0% 0/1

==> The plugin will load as a regular plugin

==> El plugin se cargará como un plugin regular.

████████████████████ 100% 1/1 done.The --verbose parameter shows on the fly the strings it is translating

Translate a PO file with a trained model

When translating strings using the standard model and a brief prompt, we will come across a lot of cases that we will have to correct. It’s time to profile the model so that it responds appropriately.

Fine-tuning consists of providing a set of “inputs” and “desired responses”, so that the model adjusts to our specifications

More information at: https://platform.openai.com/docs/guides/fine-tuning

Fine-tuning with the help of gptpo

Although you have a specific section on OpenAI’s website to work on fine-tuning, I decided to incorporate the commands into the gptpo application so I could operate from the command line. Later we will see how to use it.

The first step for fine-tuning consists of preparing the JSONL type file with all the messages to use to adjust the model.

Building a dataset to fine-tune the model

The training consists of sending a dataset where we put the text to translate and the result we want, a finite number of times, covering all cases where the model does not respond to the result we want.

To build the data model or “dataset”, you have detailed information on how to prepare your “dataset” in this section of the documentation. But it is not complicated, later you will see it clear with the example we show you.

Things to keep in mind:

- The file is written in a specific JSON Lines format that we must respect.

- The prompt will act as a “trigger”. It’s like that phrase hypnotists use to take control of the hypnotized’s mind. We will choose a short, meaningful phrase and always repeat it the same way.

- Each line in the file is a message, where we will find sections labeled by “role”.

- We have 3 roles:

- system: here goes the “prompt” and we will always indicate the same phrase.

- “user”: here goes directly the text that we want to translate,

- “assistant”: here comes the training because we will indicate exactly how the translation should be.

- Do not limit yourself to a few messages. The documentation mentions a minimum of 10. In my case, I sent around 150. The purpose is to reinforce the model with well-made examples and emphasizing all cases where ChatGPT makes mistakes and inaccuracies.

- Use the chat with its standard model to see where errors occur and work specifically with those errors to profile the model.

To build the training file, with the help of a text editor, prepare a text file and build what would be a mold. This way you can focus on the “training”, as you will only have to fill in the holes with “user” and “assistant” messages:

{"messages": [{"role": "system", "content": "You are a machine translating English to Catalan text."}, {"role": "user", "content": ""}, {"role": "assistant", "content": ""}]}

{"messages": [{"role": "system", "content": "You are a machine translating English to Catalan text."}, {"role": "user", "content": ""}, {"role": "assistant", "content": ""}]}

{"messages": [{"role": "system", "content": "You are a machine translating English to Catalan text."}, {"role": "user", "content": ""}, {"role": "assistant", "content": ""}]}

{"messages": [{"role": "system", "content": "You are a machine translating English to Catalan text."}, {"role": "user", "content": ""}, {"role": "assistant", "content": ""}]}

{"messages": [{"role": "system", "content": "You are a machine translating English to Catalan text."}, {"role": "user", "content": ""}, {"role": "assistant", "content": ""}]}

{"messages": [{"role": "system", "content": "You are a machine translating English to Catalan text."}, {"role": "user", "content": ""}, {"role": "assistant", "content": ""}]}

{"messages": [{"role": "system", "content": "You are a machine translating English to Catalan text."}, {"role": "user", "content": ""}, {"role": "assistant", "content": ""}]}

...Next, with patience and precision, we will go line by line inserting the “content” field of “user” and “assistant”:

{"messages": [{"role": "system", "content": "You are a machine translating English to Catalan text."}, {"role": "user", "content": "File does not exist."}, {"role": "assistant", "content": "El archivo no existe."}]}

{"messages": [{"role": "system", "content": "You are a machine translating English to Catalan text."}, {"role": "user", "content": "Skipping malware scan "}, {"role": "assistant", "content": "Omitiendo el escaneo de malware "}]}

{"messages": [{"role": "system", "content": "You are a machine translating English to Catalan text."}, {"role": "user", "content": "Stop Ignoring"}, {"role": "assistant", "content": "Dejar de ignorar"}]}

{"messages": [{"role": "system", "content": "You are a machine translating English to Catalan text."}, {"role": "user", "content": "No IPs blocked yet."}, {"role": "assistant", "content": "Todavía no hay IPs bloqueadas."}]}

{"messages": [{"role": "system", "content": "You are a machine translating English to Catalan text."}, {"role": "user", "content": "Error Activating 2FA"}, {"role": "assistant", "content": "Error al activar el 2FA"}]}

...Launching a training task

To tell OpenAI to “train” the model with the messages from fine-tuning-ca.jsonl we will indicate:

gptpo fine-tuning --suffix cat01 --file ./fine-tuning-ca.jsonThe --suffix will help us version the resulting model. If later we train previously trained models, versioning will help us organize the resulting collection of models.

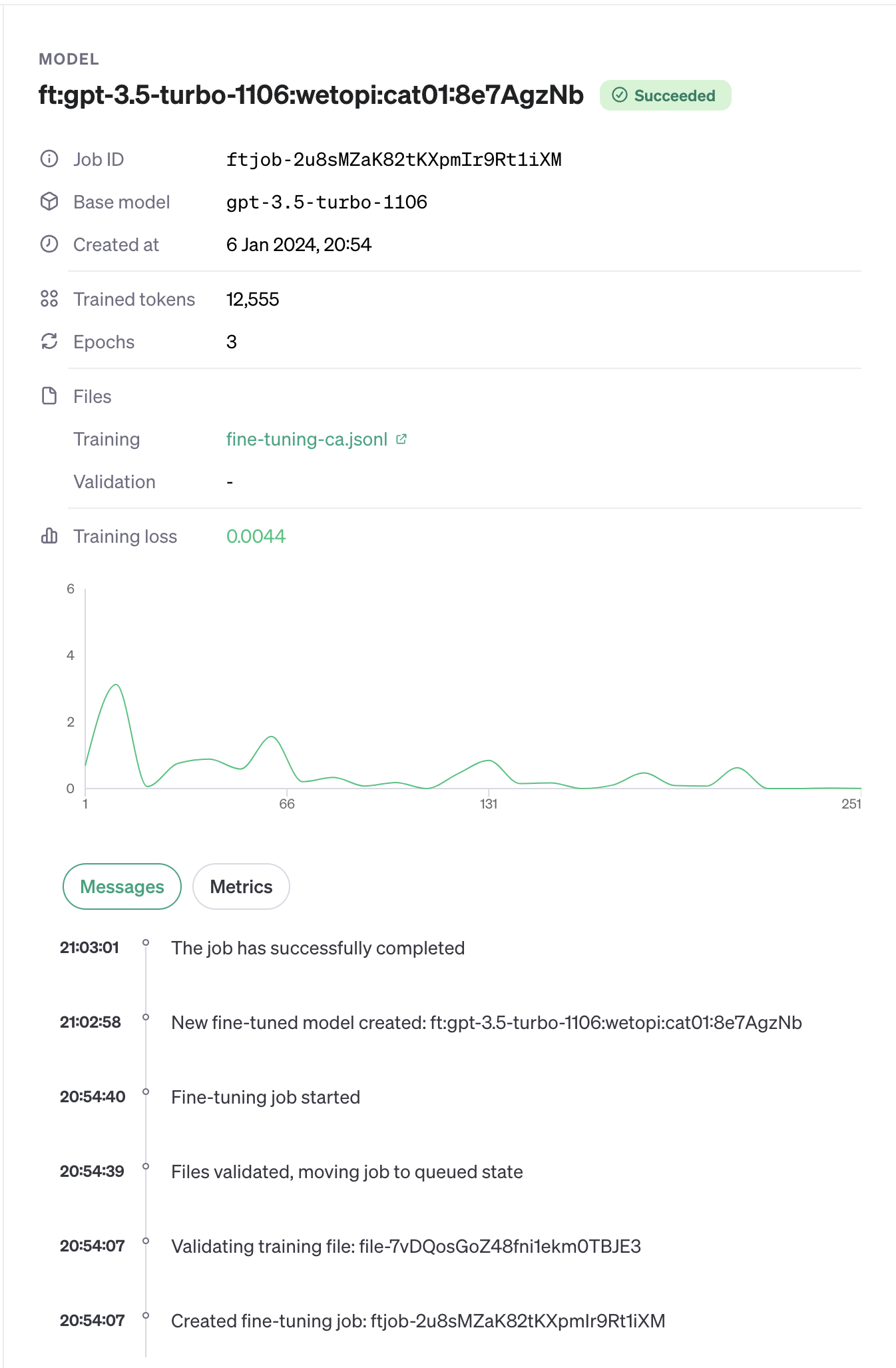

Seeing the training status of my model

After executing the training command, OpenAI queues it and notifies you by email after a few minutes

With the help of gptpo we can list the models and see the training status as follows:

gptpo fine-tuning-jobs

[

{

object: 'fine_tuning.job',

id: 'ftjob-2u8sMZaK82tKXpmIr9Rt1iXM',

model: 'gpt-3.5-turbo-1106',

created_at: 1704570847,

finished_at: 1704571377,

fine_tuned_model: 'ft:gpt-3.5-turbo-1106:wetopi:cat01:8e7AgzNb',

organization_id: 'org-iMMKhtTcRklst7guwU9LzPr6',

result_files: [ 'file-bg27iX6hYBIN2rCtDlkkZon6' ],

status: 'succeeded',

validation_file: null,

training_file: 'file-7vDQosGoZ48fni1ekm0TBJE3',

hyperparameters: { n_epochs: 3, batch_size: 1, learning_rate_multiplier: 2 },

trained_tokens: 12555,

error: null

}

]The result is a JSON object. Among the relevant data we have:

- model: the input model we want to adjust.

- status: indicates the state of the fine-tuning process.

- fine_tuned_model: the name of the model resulting from the fine-tuning.

Remember that you can continue adjusting a previously trained model.

If the translation results still show inaccuracies and errors, send a new dataset to the already trained model to continue fine-tuning it.

In the OpenAI control panel, we can also see the result of the model training:

Translate a po file with gptpo using a trained model

We now have a trained model to which OpenAI has given the name of ft:gpt-3.5-turbo-1106:wetopi:cat01:8e7AgzNb

The command we will use in gptpo does not change. That said, we will have to tell it the new model. For this, we can pass the name of the new model as a parameter --model=ft:gpt… or, as we have done previously, save it in the OPENAI_API_MODEL environment variable

export OPENAI_API_MODEL=ft:gpt-3.5-turbo-1106:wetopi:cat01:8e7AgzNbAnd launch the gptpo translate command as before

cd demo

gptpo translate --po ./test.po --verbose

░░░░░░░░░░░░░░░░░░░░ 0% 0/1

==> The plugin will load as a regular plugin

==> El complemento se cargará como un complemento regular.

████████████████████ 100% 1/1 done.Evaluating the translation result

After training the model with a total of 145 carefully chosen messages, I evaluated the “tuned” model by launching the translation of 1050 strings.

The final evaluation is very positive. The adjusted model is capable of focusing on the tone; it’s strict in terms of respecting the code, punctuations, white spaces at the end of the text.

Compared to the standard model, the result is surprising. The translation is almost perfect in semantics, tone, and person used. However, it is still essential to review the result. In some cases, it invents terms or misrepresents technical concepts. In my case, I retrained the model several times to adjust the errors.

Cost analysis

The result is good, but at what cost?

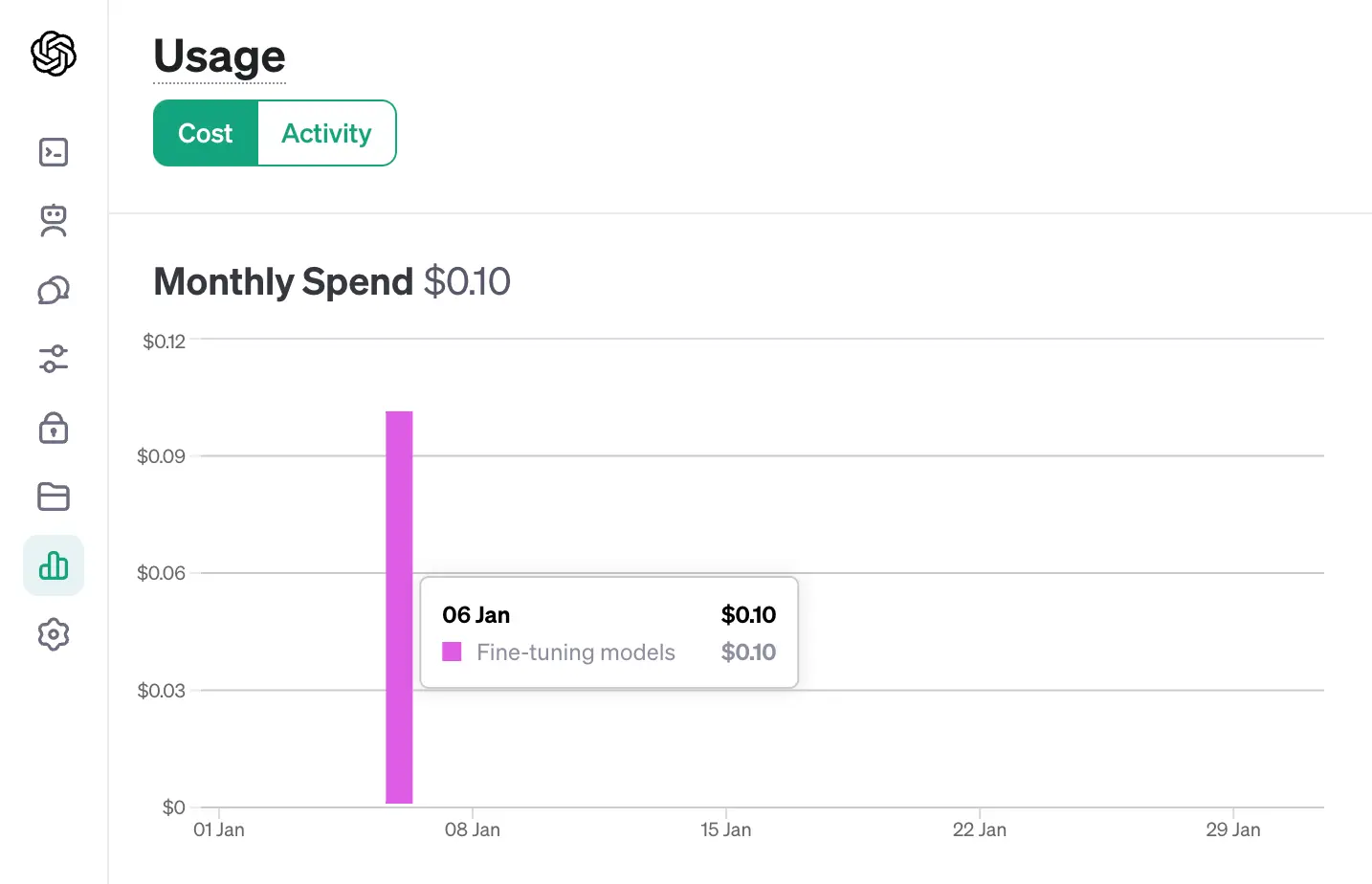

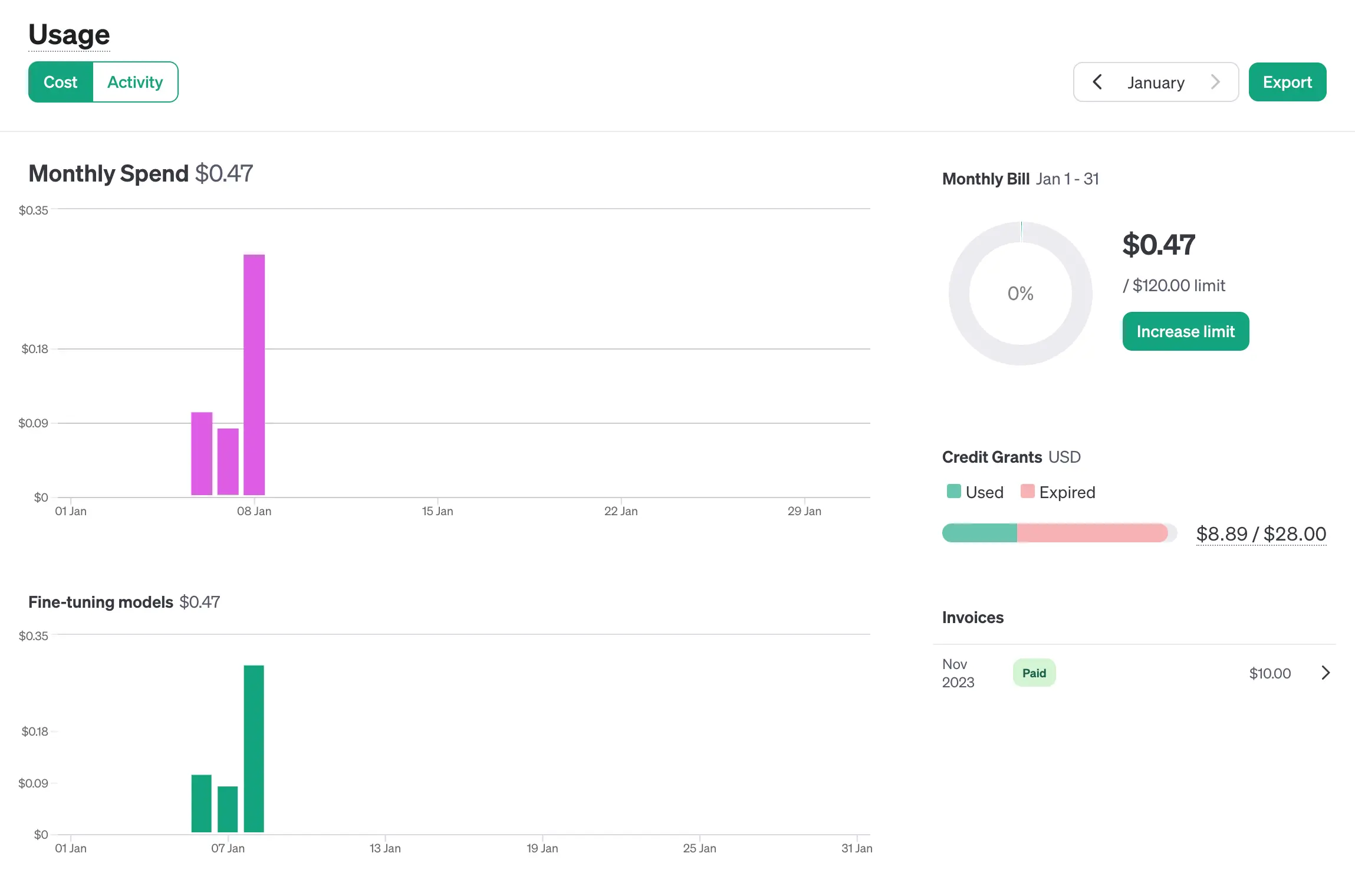

The fine-tuning of 145 messages consumed a total of 12,555 tokens which represented a cost of $0.10

For the translation of the 1,050 messages, 87,418 tokens were used costing $0.37 (I’ve discounted the fine-tuning of the day 6/1)

Translating PO files from previously translated texts

Apart from being able to obtain good translations, one of the most desired functionalities is being able to take advantage of translations previously made and validated. Here, the gptpo command will also be of great help.

In your working directory, create a subdirectory and call it, for example, previous. Inside, save all the “po” files that may be related to the theme you are addressing in your translations.

gptpo will try to fill all pending translations with the matches it can find in all files:

cd demo

gptpo preprocess --po my-plugin.po --prev ./previous

Preprocessing PO file: my-plugin.po

· Get translations from: previous/wordpress-tm.po

· Get translations from: previous/wordpress_plugins-tm.po

· Preprocessed 2 files, translated 31 messages.

Don’t you have an account on Wetopi?

Free full performance servers for your development and test.

No credit card required.

This might also interest you: